LMS is advancing human-robot interaction using AI – a large language model (LLM) approach

Human-Robot Interaction (HRI) plays a crucial role in enabling seamless collaboration between humans and robots, leading to increased productivity, efficiency, and safety in production processes. Robots equipped with advanced sensing capabilities can work alongside humans, performing repetitive and physically demanding tasks, while humans focus on more complex and strategic aspects of production. Several concepts have been presented so far, involving a variety of hardware and means of information exchange, such as gestures, physical and virtual buttons, and voice commands. Voice commands and audio feedback are often perceived as the preferred means of communication, as they leave the operator's hands free. Their main drawback, among others, is that the commands are pre-programmed and have to be memorized, which limits the fluidity of the interaction.

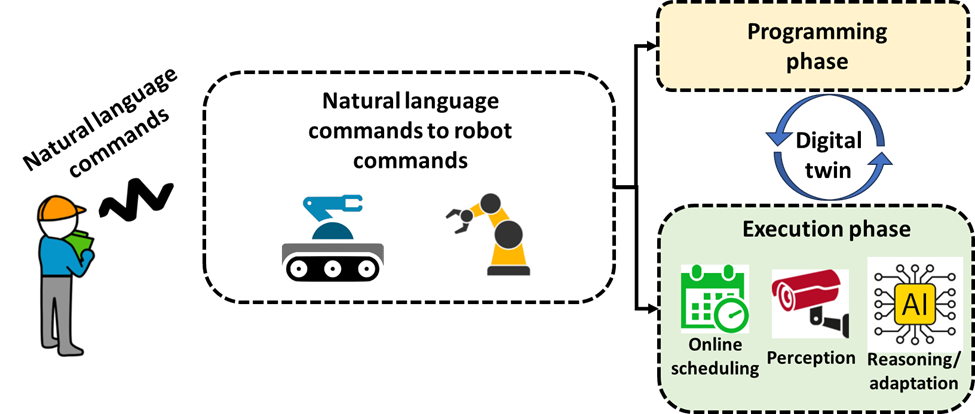

To this end, the Laboratory for Manufacturing Systems and Automation (LMS) is developing a framework that uses AI, and large language models (LLMs) in particular, to let humans and robots communicate with each other in natural language. The framework applies natural language commands in two phases of HRI, programming and execution, and supports commands for both robotic arms and mobile robots, presenting a comprehensive approach to HRI in manufacturing. During the programming phase, it allows the operator to teach precise target points, store them, and configure how flexible the order of actions is. In the execution phase, the robot program serves as a reference from which the robot can deviate according to operator needs, whether communicated verbally or detected automatically through the use of sensors. The current implementation is built upon the OpenAI framework.
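As a rough illustration of the two phases, the sketch below models a taught program as named target points plus an ordered list of steps, some of which are marked as reorderable. It is not the LMS implementation: the names `TargetPoint`, `Step`, `RobotProgram`, and `plan_execution` are hypothetical, the poses are made-up values, and the LLM step that would turn a spoken request into the `prioritise` argument is deliberately left out.

```python
from dataclasses import dataclass, field


@dataclass
class TargetPoint:
    """A taught point; the (x, y, z) pose format is an assumption."""
    name: str
    pose: tuple


@dataclass
class Step:
    """One program step acting on a stored point."""
    action: str
    target: str
    reorderable: bool = False  # may this step be moved during execution?


@dataclass
class RobotProgram:
    """Hypothetical container for what the programming phase produces."""
    points: dict = field(default_factory=dict)
    steps: list = field(default_factory=list)

    def teach_point(self, name, pose):
        # Programming phase: store a precise target point under a name.
        self.points[name] = TargetPoint(name, pose)

    def add_step(self, action, target, reorderable=False):
        # Steps may only refer to points that were taught beforehand.
        if target not in self.points:
            raise KeyError(f"unknown target point: {target}")
        self.steps.append(Step(action, target, reorderable))


def plan_execution(program, prioritise=None):
    """Execution phase: start from the taught order and deviate if allowed.

    `prioritise` stands in for an operator request that has already been
    interpreted; only steps marked reorderable are moved to the front.
    """
    order = list(program.steps)
    if prioritise is not None:
        for i, step in enumerate(order):
            if step.target == prioritise and step.reorderable:
                order.insert(0, order.pop(i))
                break
    return order


# Illustrative usage with invented points and actions.
demo = RobotProgram()
demo.teach_point("screw_a", (0.10, 0.20, 0.30))
demo.teach_point("connector", (0.40, 0.00, 0.20))
demo.add_step("fasten", "screw_a")
demo.add_step("inspect", "connector", reorderable=True)
demo_order = [s.target for s in plan_execution(demo, prioritise="connector")]
```

Keeping the taught order as the default and allowing deviation only for steps flagged during programming mirrors the idea of the program serving as a reference, while leaving the operator in control of which parts may change.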

The overarching goal is to enable robots to interpret and execute intricate commands by integrating technologies such as computer vision, artificial intelligence, and LLMs. This allows the framework to understand complex instructions involving diverse attributes such as cable details, connector evaluation, screw types, and electronic component types. The solution will be demonstrated in physical setups involving use cases from the white goods, automotive, aerospace, and additive manufacturing sectors in the context of the CONVERGING EU project.